AI folks just love makin’ their language models sound more human-like, right? It’s like their (well…our) never-ending quest. But hey, who can blame them? Well, Retrieval Augmented Generation (RAG) databases hooked up to Large Language Models (LLMs) are looking like a major plot twist in AI. It’s getting kinda freaky how these AI systems are spitting out crazy accurate and relevant responses.

RAG databases are like giving these LLMs a VIP pass to a massive library of documents. We’re talking a ‘document retriever’ that sifts through mountains of info based on what the user asks, so the LLM can shoot back answers that are way more nuanced and relevant. Think of it like this: it’s like giving a chatbot a research team.
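To make the “document retriever” idea a bit more concrete, here’s a rough sketch. It’s a toy, not how any production system does it: it scores documents by plain word overlap with the question, and the `tokenize` and `retrieve` helpers and the sample documents are all made up for illustration.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by how many query words they share, best first."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    # Keep only documents that share at least one word with the query.
    matches = [(score, doc) for score, doc in scored if score > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in matches[:top_k]]

# Hypothetical knowledge base for the example.
documents = [
    "A refund is processed within five business days.",
    "Our office is closed on public holidays.",
    "To request a refund, contact support with your order number.",
]
results = retrieve("How do I get a refund?", documents)
for doc in results:
    print(doc)
```

The two refund documents come back and the holiday one gets dropped. Real retrievers usually swap the word-overlap score for something smarter, but the shape (score everything against the question, keep the best few) is the same.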

Remember the old-school LLMs? Getting those to sound even remotely human was like trying to teach a parrot quantum physics. But RAG LLMs? They’re different. They use these things called embeddings, which are basically lists of numbers that capture what a piece of text means, so text with similar meanings ends up close together. That lets them find and retrieve relevant information with more accuracy. So yeah, they’re getting better at sounding less robotic.
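If you want to see what “comparing embeddings” looks like, here’s a minimal sketch using cosine similarity. Big caveat: the three-number vectors below are invented by hand for illustration. Real embeddings come from a trained model and have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up embeddings: not produced by any real model.
embeddings = {
    "cat": [0.9, 0.8, 0.1],
    "kitten": [0.85, 0.9, 0.15],
    "invoice": [0.1, 0.2, 0.95],
}

sim_cat_kitten = cosine_similarity(embeddings["cat"], embeddings["kitten"])
sim_cat_invoice = cosine_similarity(embeddings["cat"], embeddings["invoice"])
print(f"cat vs kitten:  {sim_cat_kitten:.2f}")
print(f"cat vs invoice: {sim_cat_invoice:.2f}")
```

“cat” and “kitten” point in nearly the same direction, while “cat” and “invoice” don’t. That closeness is what a retriever leans on when it hunts for relevant text.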

And for businesses? Man, RAG LLMs are a potential goldmine. Imagine AI customer service reps that don’t sound like they’re reading from a script, because they kinda aren’t! We’re talking personalized responses that actually make sense, all thanks to RAG.

Ever heard of the “Personality Vector” method? It’s wild. They mix context, tone, you name it, to cook up AI content that could fool even the most cynical user. Claude 3 Opus, vector search, Perplexity AI: it’s all in the mix, crafting AI content that almost sounds like it was written by, you know, a real person.

But here’s the kicker: RAG LLMs are going way beyond just chatbots. Think bigger. Picture AI systems dishing out responses about complex topics, almost like they’ve got their own opinions (don’t worry, they’re not sentient…yet). This whole “inner monologue” thing they’re working on, where AI reflects on past interactions, could be a game changer.

And one more thing – RAG LLMs can adjust how they talk. Need something super detailed or a quick, informal answer? They’ve got you covered. This level of control is pretty much unheard of in AI language models, and honestly, it’s a big deal in the world of Natural Language Processing (NLP).

Enhancing AI Language Understanding with Transformer Models and Deep Learning

AI language models are constantly evolving (it’s like they never sleep!), and transformer models combined with deep learning are like rocket fuel for their language skills. Seriously, these technologies are shaking up Natural Language Processing (NLP). We’re talking AI that can chew through massive data sets and spit out insights with scary accuracy.

Transformer models, in particular, are the stars of the show. They’ve got this cool trick called ‘self-attention,’ which lets them process info lightning-fast and understand the context of a sentence like a pro. This means AI can finally understand the nuances of human language (well, almost).
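For the curious, here’s a bare-bones sketch of what self-attention computes. It’s a single attention head in plain Python with random weights, so treat it as an illustration of the math (scaled dot-product attention) rather than a real transformer layer. The helper names and sizes are picked just for this example.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Turn a list of scores into positive weights that sum to 1."""
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over a sequence x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    k_t = [list(col) for col in zip(*k)]
    # scores[i][j]: how strongly token i attends to token j.
    scores = matmul(q, k_t)
    weights = [softmax([s / scale for s in row]) for row in scores]
    # Each output row is a weighted mix of all the value vectors.
    return matmul(weights, v), weights

def random_matrix(rows, cols):
    """Random stand-in for learned weights or token vectors."""
    return [[random.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]

random.seed(0)
seq_len, d_model = 4, 8  # 4 tokens, 8 numbers per token
x = random_matrix(seq_len, d_model)
w_q, w_k, w_v = (random_matrix(d_model, d_model) for _ in range(3))
output, weights = self_attention(x, w_q, w_k, w_v)
```

Each row of `weights` says how much one token “looks at” every other token, and every row sums to 1. That’s the context-mixing trick in a nutshell.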

And then there’s deep learning. It’s like giving AI a crash course in finding patterns. We’re talking teaching machines to see the connections in data that we might miss, leading to a more nuanced and, dare I say, ‘human’ understanding of language.

One of the coolest things about transformer models is how they deal with long sentences. Remember the old recurrent neural networks (RNNs) that would lose the thread if a sentence was too long? Transformers handle that much better: self-attention lets every word look directly at every other word, so context from way back in the text doesn’t fade out. There’s still a ceiling (the model’s context window), but inside it they stay on track, which means more accurate responses. A lot less AI going off the rails!

So, how does this relate to our buddy RAG? Well, these technologies make RAG LLMs even more powerful. We’re talking responses that are not only accurate but also make sense in context. It’s getting harder to tell if you’re talking to a bot or a human these days (which is kinda creepy, but also impressive).

For example, transformer models and deep learning can crank out those question-and-answer pairs that AI models need for training. And because they can process so much data, these AI systems are learning faster and getting better at understanding the nuances of language.

But here’s the thing: AI isn’t just mimicking us anymore; it’s starting to ‘understand’ things on a different level. With vector representations of text, developers are building AI that can grasp complex concepts and ideas. It’s like they’re forming their own opinions, which is both exciting and a little unnerving.

The Power of Vector Search and Perplexity AI in Creating Human-Like Text

AI is getting scary good at sounding like us, and it’s all thanks to fancy tech like vector search. In a nutshell, it helps AI decode the hidden meaning behind our words. We’re talking semantic relationships, people! This means AI can grasp those subtle connections in language that make us, well, human.

Think of vector search as a secret decoder ring for RAG LLMs. By turning text into vectors (which are kinda like numerical maps of words), AI can spot patterns and relationships that would make your average dictionary blush. This leads to more accurate and relevant answers. No more AI going off on random tangents!
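Here’s a small sketch of the search part: turn each passage into a vector, turn the question into a vector, and return whichever passage is closest. To keep it runnable without any extra libraries, `embed` is a toy word-count stand-in for a real embedding model, so it only matches literal words, and the passages are invented for the example.

```python
import math
import re
from collections import Counter

def embed(text, vocabulary):
    """Toy embedding: count how often each vocabulary word appears in the text."""
    counts = Counter(re.findall(r"[a-z]+", text.lower()))
    return [counts[word] for word in vocabulary]

def cosine(a, b):
    """Cosine similarity, returning 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def search(query, index, vocabulary, top_k=1):
    """Return the top_k passages whose vectors are closest to the query vector."""
    query_vec = embed(query, vocabulary)
    ranked = sorted(index, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:top_k]]

# Hypothetical passages standing in for a real document store.
passages = [
    "reset your password from the account settings page",
    "shipping takes three to five days",
    "invoices are emailed at the end of each month",
]
vocabulary = sorted({w for p in passages for w in re.findall(r"[a-z]+", p)})
index = [(p, embed(p, vocabulary)) for p in passages]  # (text, vector) pairs

best = search("how do I reset my password", index, vocabulary)
print(best[0])
```

A real setup would swap `embed` for a learned model and the sorted list for a proper vector database, but the loop stays the same: embed, compare, rank.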

But hold on, there’s more. Perplexity AI is a fantastic tool for keeping the information relevant and timely. Remember those early chatbots that would hallucinate absolutely convincing, yet completely factually incorrect responses? With Perplexity AI’s API, you can give your RAG chatbot more of the context it needs to make that a lot less likely.

That’s because Perplexity’s LLMs are connected to web-search functionality, so any query can be cross-referenced with the internet. That’s much like communicating with a human…right? When you don’t fully know the answer to a question, you (or I) will check Google first. Well, tools like Perplexity extend the same ability to the AI you’re chatting with.

The result? RAG LLMs that don’t just parrot back information but can actually hold a conversation. They can understand the nuances of your questions, respond in a way that makes sense, and even tailor their responses to your specific needs. It’s like having a personal assistant that lives inside your computer.

With vector search and Perplexity AI leading the charge, the future of AI language models is looking pretty bright (or should I say, intelligent?). As these technologies grow and evolve, we can expect AI that not only understands us but can communicate with us on a whole new level. The possibilities are mind-boggling, and the line between human and machine is getting blurrier by the day.

Leveraging RAG Databases to Generate Contextually Relevant and Accurate Responses

RAG databases are like giving AI the ultimate cheat sheet. We’re talking a massive pool of information that LLMs can tap into for more accurate and relevant answers. It’s like having access to a library that never closes and has a book on, well, whatever subjects you wish.

Imagine this: you ask a question, and instead of giving you a generic answer, the AI dives headfirst into its RAG database, pulls out all the relevant info, and hits you with a response that’s tailored to your specific needs. That’s the power of RAG databases, my friend. No more vague or irrelevant answers!
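Once the relevant info is pulled out, it has to get in front of the LLM somehow. One common way is to paste it into the prompt. Here’s a minimal sketch of that step. The `build_prompt` helper, the wording of the instructions, and the sample passages are all assumptions for illustration, and the actual LLM call is left out.

```python
def build_prompt(question: str, passages: list[str]) -> str:
    """Combine retrieved passages and the user question into one LLM prompt."""
    numbered = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the sources below. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{numbered}\n\n"
        f"Question: {question}\nAnswer:"
    )

# Pretend these came back from the retriever.
retrieved = [
    "Premium plans include priority support.",
    "Support hours are 9am to 5pm on weekdays.",
]
prompt = build_prompt("When can I reach support?", retrieved)
print(prompt)
```

Numbering the sources makes it easy to ask the model to cite which passage it used, and the “say so” instruction gives it a way out instead of guessing.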

But here’s where RAG databases really shine: in specialized areas where you need in-depth knowledge. Need a complex technical question answered? RAG databases can hook AI up with the experts (or at least their writings) to deliver the goods. It’s like having a team of specialists on speed-dial, ready to tackle even the most obscure topics.

And it’s not just about having a ton of information; it’s about understanding it. RAG databases help AI grasp the nuances of human language, the subtle differences in meaning that make all the difference. Like, you wouldn’t use the same language talking to your grandma as you would your buddies, right? These RAG systems get that.

With RAG LLMs, this translates to more human-like responses. These AI systems aren’t just spitting out canned responses. They’re using RAG databases to understand the context of your question, your intent, and even your tone, to craft responses that are both informative and engaging. Creepy? Maybe a little. Impressive? Absolutely.

As RAG databases evolve, we’re gonna see LLMs that are even more accurate, relevant, and downright human-like in their communication. The future of AI is looking pretty bright (and chatty!), and RAG databases are leading the charge.

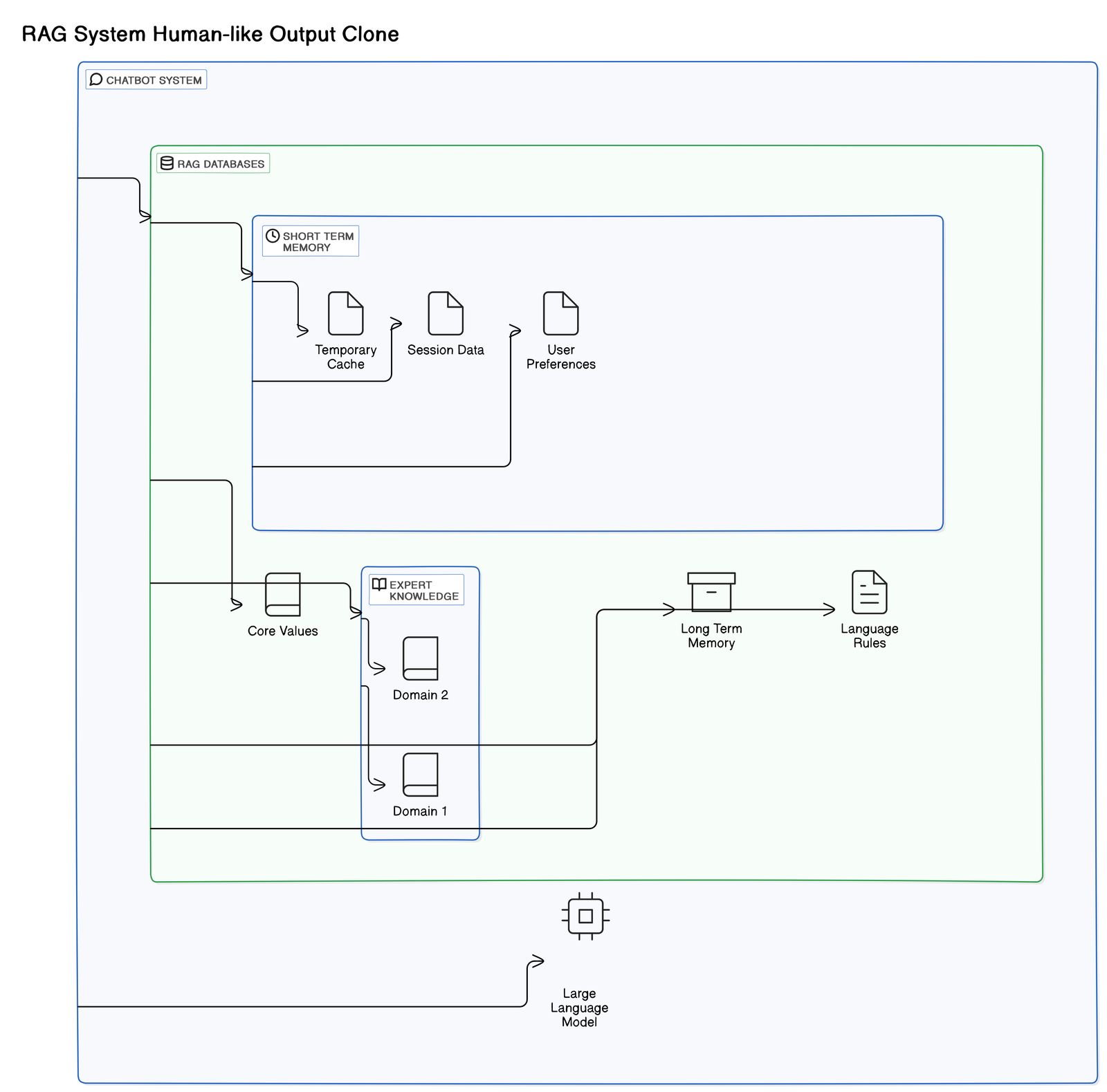

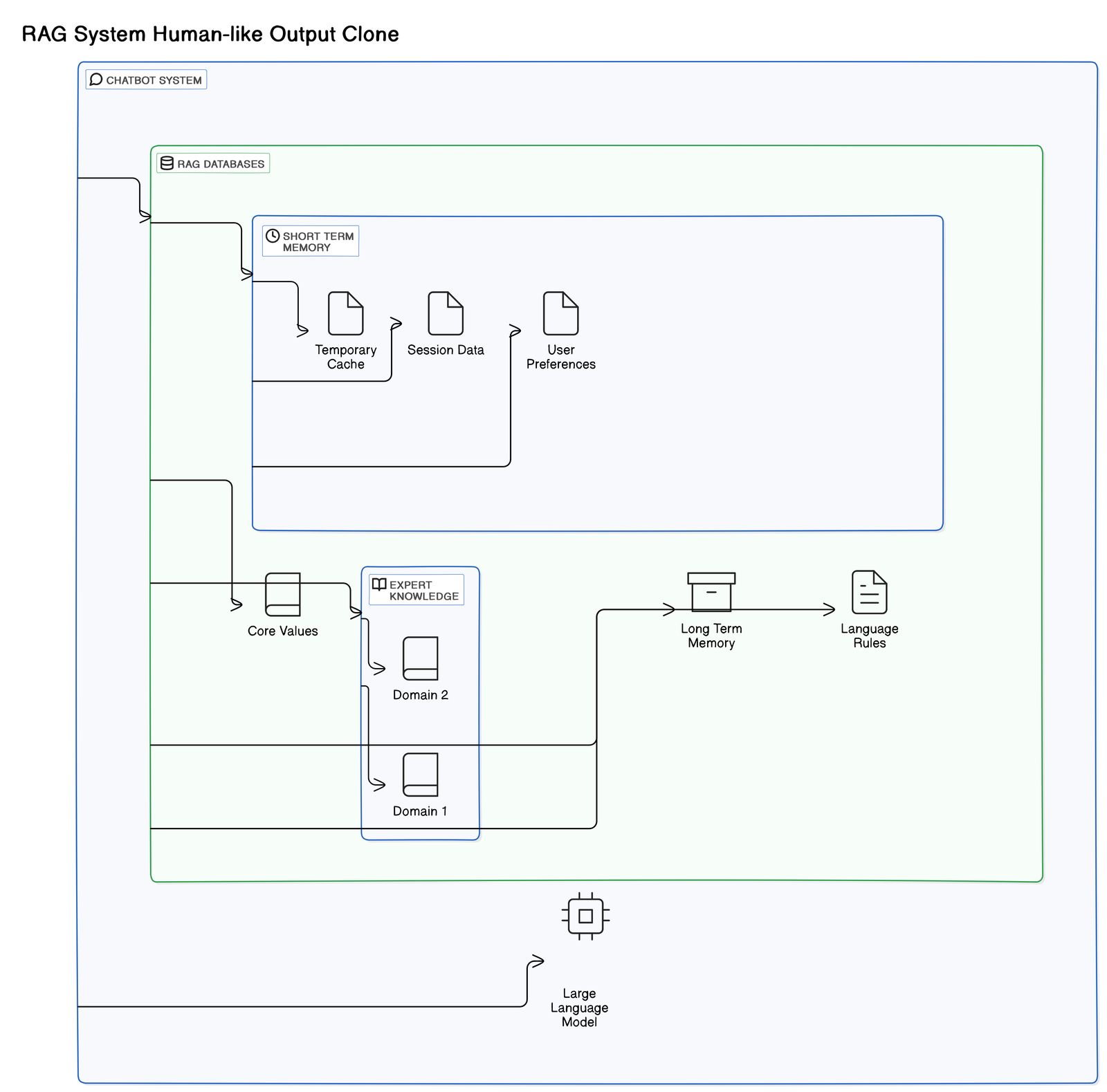

Architecting a System for Human-Like Output: Combining Values, Expert Knowledge, and Web Search

AI engineers here at A.I. Plus Automation are like architects, always trying to build the most sophisticated communication interfaces possible. And let’s be real, they’ve stepped up their game. We’re talking AI systems that don’t just process information, they practically breathe it. One way they’re doing this is by merging values, expert knowledge, and web search into one powerful system. It’s like giving AI a brain, a library card, and a moral compass all at the same time.

Think about it: AI that not only understands the literal meaning of your words but also the intent behind them. It’s like having a conversation with someone who gets where you’re coming from (and maybe even challenges you a little). This is especially critical in fields like customer service, healthcare, or finance, where accuracy and understanding can make all the difference. Nobody wants a robot recommending the wrong treatment or giving shaky financial advice, right?

Here’s why this combo is so potent: values set the guardrails for how the AI should behave. Expert knowledge brings the brains to the table. And web search keeps the AI up-to-date on the latest and greatest info. It’s like having a team of researchers working around the clock to make sure the AI stays sharp.
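Here’s one way the three ingredients could be wired together, sketched under some loud assumptions: `ContextBundle`, `fake_web_search`, and `assemble_messages` are hypothetical names invented for this example, the web search is a stub rather than a real API call, and the system/user message layout is just a common chat convention, not a description of any particular product’s internals.

```python
from dataclasses import dataclass, field

@dataclass
class ContextBundle:
    """Everything the model should see alongside the user's question."""
    values: list[str]
    expert_notes: list[str]
    web_results: list[str] = field(default_factory=list)

def fake_web_search(query: str) -> list[str]:
    """Hypothetical stand-in for a real web-search API call."""
    return [f"(placeholder search result for: {query})"]

def assemble_messages(question: str, bundle: ContextBundle) -> list[dict]:
    """Put values in the system message and knowledge in the user message."""
    system = "Follow these values:\n" + "\n".join(f"- {v}" for v in bundle.values)
    context = "\n".join(
        ["Expert knowledge:"]
        + [f"- {note}" for note in bundle.expert_notes]
        + ["Web search results:"]
        + [f"- {result}" for result in bundle.web_results]
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": f"{context}\n\nQuestion: {question}"},
    ]

bundle = ContextBundle(
    values=["Be honest about uncertainty.", "Never give medical diagnoses."],
    expert_notes=["Plan B customers get a 30-day refund window."],
)
question = "Can I still get a refund after three weeks?"
bundle.web_results = fake_web_search(question)
messages = assemble_messages(question, bundle)
```

Keeping the values in the system message and the facts in the user message is a design choice: it separates “how to behave” from “what to know,” so either one can be swapped out without touching the other.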

But the coolest part? These AI systems are built to learn and adapt. Every interaction, every piece of feedback, is a chance for them to refine their understanding and improve their responses. It’s like they’re constantly leveling up, becoming more sophisticated with each passing day.

As these AI architectures evolve, we’re gonna see LLMs that can hold their own in even the most complex discussions. Imagine AI systems that can debate philosophy, provide insightful business advice, or write a killer screenplay. Okay, maybe not that last one (yet), but you get the idea. The future of AI is full of possibilities, and these advanced architectures are paving the way.

Transforming Business Communications with RAG Databases: A New Era of AI-Generated Content

RAG databases are about to shake things up in the biz world, and yeah, I’m talking about a serious communication makeover. We’re talking AI-powered customer service that doesn’t make you wanna tear your hair out. Imagine chatbots that actually get you, answer your questions like a pro, and maybe even crack a joke or two (a little AI humor never hurt anyone).

Here’s the deal: RAG databases give businesses a secret weapon in the form of AI that can decode even the most convoluted customer questions. No more robotic responses or endless transfers to human agents. RAG-powered AI can handle those tricky queries with grace and finesse, making customers feel heard (and maybe even a little impressed).

And it doesn’t stop there. Picture this: AI crafting killer marketing campaigns that resonate with your target audience. We’re talking personalized messages, tailored content, and maybe even a few strategically placed memes (gotta stay relevant, right?). With RAG databases, the possibilities for AI-powered communication are practically endless.

So, businesses better buckle up. RAG databases are about to unleash a new era of AI-generated content, and frankly, it’s about time. Get ready for smarter, more engaging, and (dare I say) delightful interactions. The future of business communication is here, and it’s powered by RAG.