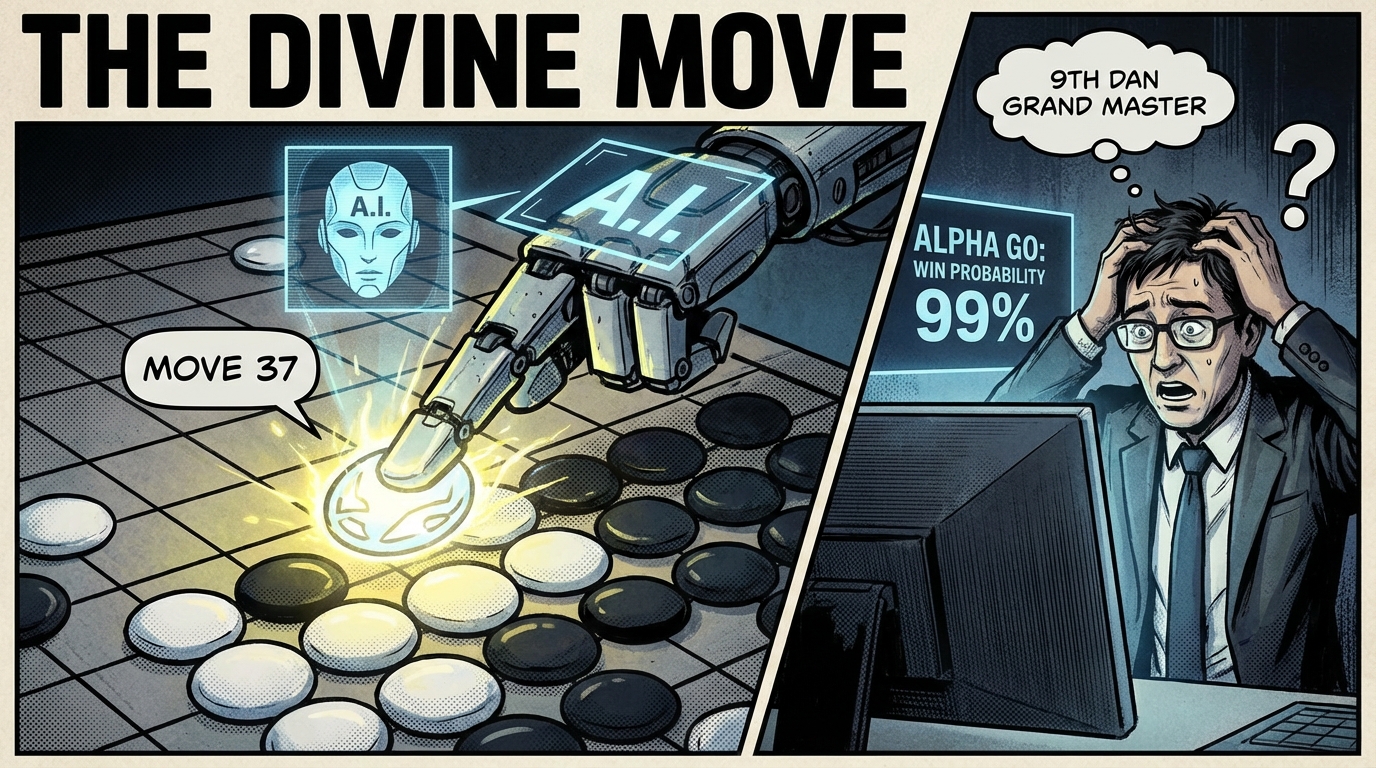

🤔 THE MOVE THAT CHANGED EVERYTHING

I've been obsessed with AI for a long time, but there's one specific moment that still gives me chills.

Imagine you're in a quiet hotel room in Seoul, March 2016. Across the board sits Lee Sedol, a 9-dan professional and arguably the greatest Go player of the decade.

On the other side? A computer program named AlphaGo.

Most experts thought a win for the machine was impossible. They said AI was 10 years away from beating a top pro. Go isn't like Chess; it has more possible configurations than there are atoms in the observable universe. It's a game of intuition, not just raw calculation.

Then, at Move 37 of Game 2, the world stopped.

AlphaGo placed a black stone on the fifth line from the edge. The commentators froze. One of them actually checked his screen to see if there was a glitch. No human would ever play that move. It was "wrong" according to 3,000 years of theory.

But it wasn't a glitch. It was a glimpse of a new kind of intelligence.

In this post, we're diving deep into Move 37. We'll look at the data, the reinforcement learning that made it possible, and why this single stone represents the ultimate "unlock" for AI creativity.

🧪 WHY GO WAS THE "FINAL BOSS" OF AI

To understand why Move 37 was so wild, you have to understand the sheer scale of the problem.

Traditional AI (like IBM's Deep Blue) leaned on "brute force." It searched enormous numbers of positions per second and scored them with hand-tuned evaluation rules. That works for Chess, but for Go? Not even close.

| Feature | Chess | Go |
|---|---|---|
| Board Size | 8x8 | 19x19 |
| Legal Moves per Turn (average) | ~35 | ~250 |
| Total Configurations (approx.) | ~10^47 | ~10^170 |

The number of configurations in Go is insane. There aren't enough computers on Earth to calculate every possible outcome.
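To get a feel for those exponents, here's a back-of-the-envelope sketch. The game lengths (about 80 moves for Chess, about 150 for Go) are commonly cited rough figures that I'm assuming here, not numbers from the table, and `log10_game_tree` is just a helper name I made up. The `3 ** 361` line is a crude upper bound on Go positions (every point is empty, black, or white), so it overshoots the ~10^170 legal-position figure a little.

```python
import math

def log10_game_tree(branching: float, depth: int) -> float:
    """Return log10 of branching**depth, a rough size of the game tree."""
    return depth * math.log10(branching)

# Assumed typical game lengths: ~80 moves (Chess), ~150 moves (Go).
chess_exponent = log10_game_tree(35, 80)
go_exponent = log10_game_tree(250, 150)

# Crude upper bound on Go board configurations: 3 states per point, 361 points.
go_positions_upper_bound = 3 ** 361

print(f"Chess game tree: about 10^{chess_exponent:.0f}")
print(f"Go game tree:    about 10^{go_exponent:.0f}")
print(f"3^361 has {len(str(go_positions_upper_bound))} digits")
```

Even if you nudge the assumed game lengths up or down, the gap between the two games stays at hundreds of orders of magnitude.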

This is why Go was the "Grand Challenge." It required something more than logic. It required creativity.

DeepMind, led by Demis Hassabis, knew they couldn't just code a bunch of rules. Human players have thousands of "rules of thumb" (like joseki patterns), but these are just filters based on human experience.

DeepMind wanted something unconstrained. They wanted to solve intelligence itself.

🧠 REINFORCEMENT LEARNING: THE FREEDOM TO BE "WRONG"

So, how did they build it? They didn't just feed it a database of moves and tell it to "do that."

AlphaGo used a combination of Monte Carlo Tree Search (MCTS) and two deep neural networks that acted like the program's "eyes" and "gut":

- The Policy Network: This essentially pruned the search tree. Instead of looking at 250 possible moves, it looked at the top few that a human expert would likely play.

- The Value Network: This was the "critic." It evaluated the board state and said, "If we go down this path, we have a 65% chance of winning."
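The division of labor between those two networks can be sketched in a few lines. This is a deliberately simplified toy, not AlphaGo's actual code: the move names, priors, and win estimates are invented, `choose_move` is a hypothetical helper, and the hard top-k cutoff stands in for the softer weighting that a real tree search applies.

```python
from typing import Callable, Dict

def choose_move(priors: Dict[str, float],
                value_of: Callable[[str], float],
                top_k: int = 3) -> str:
    """Keep the top_k moves by policy prior, then pick the best by value."""
    # "Policy" step: narrow the field to the moves judged most likely.
    candidates = sorted(priors, key=lambda m: priors[m], reverse=True)[:top_k]
    # "Value" step: score each surviving candidate and take the highest.
    return max(candidates, key=value_of)

# Invented numbers for illustration only.
priors = {"A": 0.45, "B": 0.30, "C": 0.15, "D": 0.07, "E": 0.03}
win_estimates = {"A": 0.52, "B": 0.65, "C": 0.48, "D": 0.70, "E": 0.40}

best = choose_move(priors, lambda m: win_estimates[m], top_k=3)
print(best)
```

Notice that move D has the highest win estimate in this toy data but never gets evaluated, because the hard cutoff throws it away. That is the weakness of pruning too aggressively on what looks "normal."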

But here's the thing... they started by training it on 30 million positions from human games on the KGS Go Server, played by strong amateurs ranked 6 to 9 dan. It got good at predicting what those players would do.

But to reach "God mode," it had to leave the human nest. It had to stop being a copycat.

The AlphaGo Self-Play Evolution

DeepMind used Reinforcement Learning (RL) to push the system beyond human limits. They had AlphaGo play against different versions of itself millions of times.

Think about that. It played more games of Go in a few weeks than any human could play in a thousand lifetimes.

Every time it won, the moves that led to victory became a little more likely next time. Every time it lost, those same moves became a little less likely. It was an iterative, brutal process of survival of the fittest logic.
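Here's a minimal sketch of that win/lose feedback loop, using a REINFORCE-style policy-gradient update. Big caveats: this is a one-move toy, not Go and not real self-play. The opponent is abstracted into fixed, made-up win probabilities (`WIN_PROB`), and the learning rate, game count, and seed are arbitrary assumptions.

```python
import math
import random

# Hypothetical chance of winning after each of three possible moves.
WIN_PROB = [0.3, 0.5, 0.8]

def softmax(logits):
    """Turn raw preferences into a probability distribution."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train(games: int = 5000, lr: float = 0.05, seed: int = 0):
    rng = random.Random(seed)
    logits = [0.0, 0.0, 0.0]  # start with no preference at all
    for _ in range(games):
        probs = softmax(logits)
        move = rng.choices(range(3), weights=probs)[0]
        # Outcome is +1 for a win, -1 for a loss.
        outcome = 1.0 if rng.random() < WIN_PROB[move] else -1.0
        # REINFORCE: push up the log-probability of the chosen move
        # after a win, push it down after a loss.
        for k in range(3):
            indicator = 1.0 if k == move else 0.0
            logits[k] += lr * outcome * (indicator - probs[k])
    return softmax(logits)

final_probs = train()
print([round(p, 3) for p in final_probs])
```

Nobody tells the policy which move is best. It only ever sees wins and losses, and the preference for the strongest move emerges from that signal alone.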

This is the kicker: It learned features of the game that humans had never even considered. Because it wasn't being taught how to play by humans, it was free to discover the actual "truth" of the game. It wasn't restricted by "good style" or "proper etiquette." It only cared about the objective result: the win.

📊 THE DATA BEHIND MOVE 37

Now, let's look at the actual stats from that moment in Game 2. This is where the "AI Expert" bias usually falls apart.

When AlphaGo was calculating its move, it looked at the probability that a human would play that specific stone.

The number it came back with? 1 in 10,000.

AlphaGo knew that no human player would ever make that move. In its internal probability distribution, it saw that humans ignore that area of the board 99.99% of the time.

But it didn't care. It didn't think, "Oh, I must be wrong because nobody else does this." It trusted its own value estimation over the entire history of human expertise.
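How can a search end up preferring a move its own "human likelihood" estimate rates at 1 in 10,000? Here's a toy sketch using a PUCT-style selection rule, the general family of rule used in this kind of tree search. Everything specific is an assumption for illustration: the three move names, their priors and win rates, the exploration constant `C_PUCT`, and the neutral starting guess `UNVISITED_Q`. Win rates are treated as fixed numbers so the run is deterministic.

```python
import math

C_PUCT = 1.5       # exploration constant (assumed value)
UNVISITED_Q = 0.5  # neutral guess for a move that has never been tried (assumed)

# move -> (prior from the policy, win rate the search discovers on visiting)
MOVES = {
    "conventional": (0.7000, 0.48),
    "alternative": (0.2999, 0.45),
    "surprise": (0.0001, 0.55),  # the 1-in-10,000 move
}

def run_search(simulations: int) -> dict:
    """Return how many simulations each move received."""
    visits = {m: 0 for m in MOVES}
    total_value = {m: 0.0 for m in MOVES}
    for n_total in range(simulations):
        def score(move: str) -> float:
            prior, _ = MOVES[move]
            n = visits[move]
            q = total_value[move] / n if n else UNVISITED_Q
            # Exploration bonus: large for high-prior moves, shrinks with visits.
            bonus = C_PUCT * prior * math.sqrt(n_total + 1) / (1 + n)
            return q + bonus
        chosen = max(MOVES, key=score)
        visits[chosen] += 1
        total_value[chosen] += MOVES[chosen][1]
    return visits

visits = run_search(20_000)
most_visited = max(visits, key=lambda m: visits[m])
print(visits, most_visited)
```

Early on, the high-prior moves soak up nearly all the attention. But their exploration bonus fades as they get visited, and once the search finally tries the long shot and finds a better win rate, the simulations pile onto it. The prior decides where the search looks first; the value decides where it ends up.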

| Metric | Move 37 Analysis |
|---|---|
| Human Probability | 0.01% |
| Internal Win Probability | ~55%+ |
| Resignation Threshold | 20% |
| Simulations Run | 10,000,000+ |

AlphaGo's Value Network saw something that Lee Sedol didn't. It realized that while the move looked "slack" (meaning it didn't immediately secure territory or attack), its long-term strategic value was massive.

It was a shoulder hit on the fifth line. Convention (and 3,000 years of theory) says you play on the third or fourth line to secure edges. Playing on the fifth is like throwing a stone into the void.

But as the game progressed, that "slack" move became the anchor for the entire center of the board. It set up a win that Lee Sedol conceded by resigning after 211 moves. It was a "declaration of victory" more than 100 moves before the game actually ended.

💥 WHAT EVERYONE GETS WRONG ABOUT "CREATIVITY"

People love to say that AI isn't creative. They say it's just "predictive text" or a "stochastic parrot."

But Move 37 proves that AI can be more creative than humans precisely because it isn't burdened by our "orthodox" thinking.

1. The Human Bias Trap

We learn Go (or coding, or marketing) by following templates. We follow what worked last time. We are constrained by 3,000 years of "received wisdom" that we treat as objective truth. Ke Jie, then the world No. 1 and still a teenager, said it best: "After humanity spent thousands of years improving our tactics, computers tell us that humans are completely wrong."

2. The Algorithmic Ingenuity

AlphaGo wasn't being "random." It was being effective.

As Demis Hassabis puts it: "A move can only be considered truly creative if it’s also effective."

Move 37 was beautiful because it was functional. It was a moment of exquisite algorithmic ingenuity that nobody saw coming because we weren't looking. We were too busy looking at the "proper" way to play.

🧪 THE "DIVINE MOVE" (GAME 4)

I can't talk about Move 37 without mentioning Game 4. This is the part of the story that humanizes the whole technological revolution.

After losing three games in a row, Lee Sedol was in a dark place. He was apologizing to the Korean public for the "defeat of mankind." The pressure was astronomical.

Then, he found his own moment of brilliance: Move 78.

It's been dubbed the "Divine Move" (kami no itte in Japanese), a brilliant tesuji. It was a wedge in the center of the board that Aja Huang (the guy placing stones for AlphaGo) said the program simply didn't see coming.

Here's why this matters: AlphaGo's Policy Network had badly underestimated the probability of Lee making that move. It was so low on its "radar" (less than 1 in 10,000) that the search had barely explored it, and when he played it, the program's logic started to crumble.

AlphaGo began making "blunder" moves (87 to 101). It tried to "pay" its way out of the mistake. Its win probability plummeted. It eventually resigned.

This is the beautiful part of the story. AI pushed the human to a level of excellence he had never reached before. Move 37 forced Lee Sedol to find Move 78. He became a better version of himself because he was playing the ultimate opponent.

🧠 THE INSIGHT: AI AS A TOOL FOR DISCOVERY

Look, I'll be honest... the advice I keep seeing from "gurus" is that AI will replace us.

But the real insight from the AlphaGo story is that AI is a discovery tool.

It doesn't replace the artist; it shows the artist a new color they didn't know existed. It's like a high-powered telescope for our own intellect.

Fan Hui (the European champ who had lost 5-0 to AlphaGo a few months earlier) said the match made him a better player. His world ranking jumped from 633 to 300 after playing the machine.

Why? Because he stopped playing "the human way" and started playing "the Go way." He stopped worrying about looking like a pro and started worrying about the truth of the board.

🛠️ THE PLAYBOOK: HOW TO USE "MOVE 37 THINKING" TODAY

So, how do we apply "Move 37 thinking" to our own work in 2026?

We aren't all Go grandmasters, but we are all dealing with complex problems. Whether it's coding a new app, designing a marketing funnel, or optimizing a supply chain, we are often stuck in our own "human-only" loops.

1️⃣ Embrace the "Slack" Move (The Non-Obvious)

Don't be afraid to try something that feels "wrong" according to current industry standards. If the data suggests an approach that 99% of the industry rejects, but it has a high objective win rate, test it.

Implementation Step: Run an A/B test on a landing page design that breaks every "rule" in your niche, but follows a logic found by an AI analysis of your user behavior.
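If you do run that test, you need a way to tell a real lift from noise. Here's a minimal sketch of a two-proportion z-test using only the standard library. The visitor and conversion counts are made up for illustration, and `two_proportion_z_test` is my own helper name, not a library function. For anything high-stakes, a proper stats package and a pre-planned sample size are the safer route.

```python
import math

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare conversion rates of control (a) and variant (b).

    Returns the z statistic and the two-sided p-value.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both pages convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF via the error function.
    cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - cdf)
    return z, p_value

# Hypothetical results: "orthodox" page vs. rule-breaking page.
z, p_value = two_proportion_z_test(conv_a=120, n_a=2400, conv_b=156, n_b=2400)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

The point is the same one AlphaGo made: let the measured win rate decide, not how strange the design looks.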

2️⃣ Use AI to Stress-Test Your "Orthodox" Bias

Put your business plan or architecture into a model and ask it: "What is the most non-obvious, effective solution here that ignores every piece of conventional wisdom in my field?"

Don't just take its answer as gospel. Use it as a seed for a new line of thought.

3️⃣ Look for the "Truth" of the System

Stop worrying about "best practices" that haven't been updated in three years. Start focusing on the objective metrics of success. AlphaGo didn't care about looking smart; it cared about the probability of winning.

Implementation Step: Audit your internal processes. Which ones are there because they "feel right" (human bias) and which ones are there because they objectively move the needle (Value Network logic)?

4️⃣ Use AI to Level Up (The Fan Hui Effect)

Don't just use AI to do the work for you. Use it to show you what you're missing.

Implementation Step: Every time you finish a piece of work, ask the AI: "Show me three ways an expert from a completely different field would solve this problem." This expands your "search radar" for your next project.

💡 PRO TIP

When you're using LLMs for brainstorming, explicitly tell them: "Ignore standard best practices for a moment. Give me an approach that fits Move 37 logic—something 99.9% of humans would reject, but is mathematically or strategically sound."

The results are usually wild and often lead to genuine breakthroughs.

⚠️ WATCH OUT FOR

AI isn't infallible. As Game 4 showed, AI has "blind spots" (especially in MCTS-based systems when faced with rare, high-impact events). Use it to expand your thinking, but always keep your hand on the wheel. You are the one who provides the intent.

The Bottom Line:

Move 37 wasn't just a stone on a board. It was a bridge to a future where humanity and AI push each other to find truths that neither could find alone.

AI isn't the finish line. It's the starting gun. It's the "unshackling" of human potential from the weight of tradition.

What’s your "Move 37" going to be?

CHEERS!